Introduction

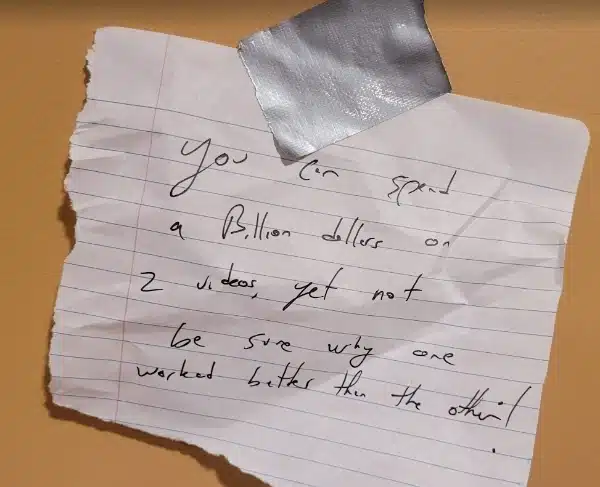

In the Videoquant office, a cheeky sign hangs on the wall, penned by founder Tim D’Auria. It reads, “You can spend a billion dollars on 2 videos, yet not be sure why one worked better than the other.” The sign serves as a humorous yet poignant reminder of the complex challenges that this start-up is solving.

The sign’s point follows from the complexity of the video medium. In a world where video content rules, the stakes for creating winning videos have never been higher. Yet despite big budgets and even bigger data, brands and content creators struggle to decide where to start and, once they have started, to understand the “why” behind a video’s success or failure. These struggles are rooted in statistics.

The Paralysis of Choice & Why Unexplored Video Concepts Haunt Brand Strategy

The sheer number of choices facing brands and content creators in video strategy is staggering. One of the most critical, yet confounding, decisions revolves around the video’s messaging. Should the messaging be designed to inform, entertain, or persuade? Will it focus on the product’s unique features or take the viewer on a narrative journey that aligns with the brand’s core values?

While messaging serves as a pivotal example, it’s merely the tip of the iceberg. The multitude of other variables and options to consider can be equally impactful, each accompanied by its own set of alternatives that remain unexplored. In the absence of empirical data comparing these untried variations to those actually selected, brands face the daunting task of prioritizing what to produce and test.

This lack of comparative data turns the art of crafting a video strategy into a high-stakes guessing game. The weight of unmade choices hangs like a specter over each decision, resulting in a silent graveyard of missed opportunities and untapped potential. The absence of a methodology to empirically test these myriad alternatives keeps brands in a state of perpetual ‘what if,’ undermining confidence in their video strategies and making the path to video success frustratingly elusive.

The Common Misconception: We Can Determine What Works By Looking At Our Video Metrics

While starting out with video involves guesswork that tends to produce underwhelming outcomes, the problem is just as hard once marketers have data in hand from videos they have deployed. Many brands mistakenly believe that they can figure out what works in their videos using their own limited data. In fact, some measurement companies even give the false impression that they can measure which attributes of your videos are driving outcomes. For example, video X has background music and video Y doesn’t. Video X did better, so background music must work better, right? Wrong! The two videos almost certainly differ in many other ways as well, and a single comparison cannot statistically control for those other variables, so the conclusion is invalid with only this data.
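The background-music example can be sketched in a few lines of Python. This is only an illustration: the attribute names and values below are hypothetical, and the helper functions are not part of any real measurement tool. The sketch lists every attribute on which two videos differ and reports whether a single comparison could, even in principle, isolate one of them.

```python
def differing_attributes(video_x, video_y):
    """Return the sorted names of attributes whose values differ between two videos."""
    keys = set(video_x) | set(video_y)
    return sorted(k for k in keys if video_x.get(k) != video_y.get(k))


def can_isolate_single_attribute(video_x, video_y):
    """A two-video comparison can point at one attribute only if exactly one differs."""
    return len(differing_attributes(video_x, video_y)) == 1


# Hypothetical attribute values, chosen purely for illustration.
video_x = {
    "background_music": True,
    "actor_gender": "female",
    "voiceover_tone": "upbeat",
    "length_seconds": 30,
}
video_y = {
    "background_music": False,
    "actor_gender": "male",
    "voiceover_tone": "calm",
    "length_seconds": 15,
}

print(differing_attributes(video_x, video_y))
print(can_isolate_single_attribute(video_x, video_y))
```

Here four attributes differ at once, so the performance gap between X and Y could come from any of them or from their interaction. Even when only one attribute differs, a valid conclusion would still need enough impressions and a properly controlled test.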

The Achilles Heel To Video Optimization: Video Is A Complex Medium

The problem with video optimization is linked to two factors: (1) the cost to develop each video test and, more importantly, (2) the number of tests required to understand why one video works better than another. With SEO or SEM, one can come up with a keyword idea, launch an A/B test, and get an answer at close to zero cost. Video is a much more complicated medium with much higher costs.

Barrier 1: Video Cost per Test is Too High

First, the cost to develop video marketing is significantly higher than that of any other marketing medium. For example, developing a keyword for Search Engine Marketing (SEM) could be as simple as coming up with a word and entering it into Google Ads… done! Video content, on the other hand, starts with an idea that needs to be fleshed out into a coherent narrative and developed into, quite literally, a motion picture. For even an inexpensive TV commercial test, the steps often include: idea development, script writing, storyboard creation, location scouting, equipment investment, crew employment, hiring actors, voice-over recording, music selection, sound design, animation or visual effects, legal review, copyright clearance, test screenings, and post-production. Launching a simple TV test costs about 1800x more per test than an SEO or SEM test.

However, the significant costs are not confined to television; creators of YouTube and social media videos face a comparable challenge. Even a solo YouTube content creator producing videos inexpensively from their parents’ basement faces the intricacies of video production, which make each video test significantly more costly than a test in other marketing media.

While launching thousands of highly distinct SEM tests in a single day is feasible, attempting the same with video is an entirely different story. Furthermore, this isn’t about minor alterations like changing the background color or a text overlay; each video test must involve substantial core changes to determine effective strategies quickly, making the cost per test a high barrier for even the largest brands.

Barrier 2: # of Videos Needed To Unwind What Works Exceeds Fortune 500 Budgets

For a valid test that gives us insight into what works and what doesn’t in a video, the relevant sample size isn’t how many impressions (views) each video has received. Rather, we need to look at impressions per video attribute combination. Attributes can include anything from messaging and talking points to the gender of actors to the tone of the voiceover to the specific type of product shown. The number of possible combinations is astronomically large, making the number of unique videos and associated tests needed to unwind what works equally large. It is so large that no single brand, including those that spend billions of dollars per year on marketing, can measure what about its videos is causing them to mostly fail and sometimes succeed.

Let’s consider two videos, A and B, and assume we’re only analyzing three attributes:

- Gender of the actor

- Tone of the voiceover

- Type of product shown

Even in this overly simplified example, the number of unique attribute combinations grows quickly, because it is the product of the number of options available for each attribute. When you extend this across thousands of attributes, the true sample size needed to draw a statistically significant conclusion about why even two videos performed differently is daunting.
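The multiplication behind this example can be sketched in Python. The option counts and the impression total below are made-up values for illustration only, not Videoquant figures, and spreading impressions evenly across combinations is a simplifying assumption.

```python
from math import prod

# Hypothetical number of options per attribute (illustrative assumptions only).
attribute_options = {
    "actor_gender": 3,
    "voiceover_tone": 5,
    "product_type": 10,
}


def count_combinations(options):
    """Unique attribute combinations = product of the option counts."""
    return prod(options.values())


def binary_combinations(n_attributes):
    """Combinations when each of n attributes is a simple yes/no choice."""
    return 2 ** n_attributes


def impressions_per_combination(total_impressions, n_combinations):
    """Whole impressions available per combination, assuming an even spread."""
    return total_impressions // n_combinations


three_attribute_total = count_combinations(attribute_options)
print(three_attribute_total)                                   # 150
print(binary_combinations(20))                                 # 1048576
print(impressions_per_combination(1_000_000, three_attribute_total))
print(impressions_per_combination(1_000_000, binary_combinations(20)))
```

Under these assumed numbers, three attributes already yield 150 combinations, and just 20 yes/no attributes yield more than a million. A million impressions spread evenly would then leave less than one impression per combination, which is why raw view counts say little about which attributes drive results.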

The Illusion of Big Budgets

Even if you were to spend a staggering $2 billion on A/B testing just two creatives, the information you gain about video would be quite limited. With so many attributes at play, pinpointing the exact combination that makes one creative outperform another is not feasible for any one brand or content creator. You could try changing one feature at a time, but how do you decide which features to test out of the millions of possibilities? The end result? A guessing game with exorbitant costs and dubious returns. This is why most brands never achieve success with video.

The Solution: Let Others Pay For Your Tests (enter Videoquant)

So how can this dilemma be resolved? No single brand or content creator can generate enough video tests at a low enough cost and fast enough to rule out what video content fails and identify what is likely to succeed. This dilemma is why the Videoquant (VQ) platform was founded: to mine data across millions of TV commercials and videos to identify tests already done and paid for by others, providing actionable insights that individual companies simply can’t arrive at on their own. Chances are, if you have an idea for a new video, it’s already been tried by someone else. We call this “competitor funded video R&D”, but it’s not limited to competitors. We need to analyze about 100K – 200K unique video creatives in our proprietary database before we even start to see statistical lift that can help us understand why one video works and another doesn’t.

Conclusion

Navigating the complex world of video content success is no small feat. With high development costs, an extensive variety of attributes to consider, and a lack of reliable metrics, achieving a winning video formula seems like hunting for a needle in a haystack. Even big-budget brands can’t tackle this complex equation alone. This is why Videoquant was formed, turning the tide in favor of data-driven decisions by aggregating a vast array of video data from across the industry. It’s only when we embrace such collective insights and recognize the inherent complexity of video that we can begin to demystify the elusive “why” behind video success or failure. So, the next time you find yourself lost in the numbers and permutations of video content, remember, the answer lies not just in big data, but in smart, collaborative data. We truly are better together.